Neubauer Corporation


Sam Altman, chief executive and co-founder of OpenAI, defended the generative artificial intelligence technology his company uses in its models, calling it “safe enough,” and urged players in the sector to make rapid progress with it, despite the current controversy surrounding the company that created ChatGPT.

The European Union was quick to respond to marketing from the United States that has reached Europe. The EU Parliament is asking OpenAI for a computer-voiced presentation that answers questions on how neural processing units (NPUs) and AI models work. We have seen a great deal of marketing around these platforms, which promote software coming from the U.S. and now capitalize on the European market.

But the accumulation of controversy surrounding artificial intelligence has somewhat stolen the spotlight from the major IT company, which describes itself as a “leading company in the field of artificial intelligence,” said a member of the EU Parliament.

On Tuesday, Altman apologized to the public after OpenAI was accused of copying algorithms for the ChatGPT assistant tool.

In a statement Monday, the EU said it had refused the company’s offers because it was “shocked” that they did not work properly.

An actress approached by OpenAI said: “Last September, I received an offer from Sam Altman, who wanted me to be the voice of the current ChatGPT 4.0 system. He said he believed my voice would comfort people,” confirming that she “rejected the offer.”

The actress continued, “When I heard the demo, I was shocked, angry, and in disbelief that Altman had developed a voice so eerily similar to mine that my close friends and the media couldn’t tell the difference.”

Artificial intelligence safety

Sam Altman encouraged the thousands of developers watching the conference to “take advantage” of this “special period,” “the most exciting since the invention of the mobile phone, or even the Internet,” and said, “The current stage is not the right time to postpone your ideas or wait for future innovations.”

Many observers and officials are concerned about the speed at which major technology companies are deploying advanced, more human-like artificial intelligence tools, while the companies themselves celebrate their rapid progress, stressing that what has been achieved to date “is only the beginning.”

But the scene is peppered with dissenting voices, such as Jan Leike, the former head of the OpenAI team responsible for overseeing the potential long-term risks of super-AI, meaning artificial intelligence with cognitive capabilities similar to those of humans.

An EU spokesperson said in a letter submitted to the parliament and posted on the “X” platform: “AI companies must become companies that put the safety of general artificial intelligence above all other considerations, because with their marketing and advertising they are lulling people to sleep, thinking they can do whatever they want.” One of OpenAI’s founders, Ilya Sutskever, also left the company, which disbanded the team and distributed its members among other teams.

Microsoft and OpenAI partnership

On Monday, Microsoft unveiled a new category of personal computers called Copilot Plus, which features generative artificial intelligence tools integrated directly into Windows, the company’s world-leading operating system.

An artificial intelligence system similar to ChatGPT, which Microsoft calls Copilot, is available across the company’s products, including the Teams and Outlook programs and its Windows operating system.

Artificial intelligence expert Gary Marcus said that the new computers constitute a “major target” for hacking operations.

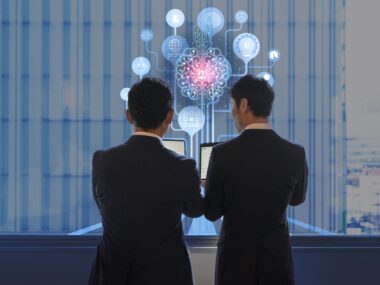

Artificial intelligence companies have recently turned to a new approach to increase their financial returns: developing smaller models with fewer parameters, which are less expensive to train and operate and can therefore be offered to a larger number of businesses.

Small language models (SLMs) are a profitable, practical option for technology companies that have invested billions of dollars in developing large language models (LLMs), which power major platforms such as ChatGPT from OpenAI, Copilot from Microsoft, and Gemini from Google, according to the Financial Times.

In general, the larger an AI model and the more parameters it has, the better its performance. However, companies like Meta, Google, and Microsoft have increasingly moved toward smaller models, especially as these demonstrate capabilities comparable to those of large models.

Developing small language models

Technology companies seek quick profits by developing small language models, which need fewer training resources, whether in terms of the volume of data required or the energy that AI processor chips consume to analyze and classify data and to learn to handle images, text, video, and sound. This helps attract a greater number of companies in various fields.

Meta’s head of global affairs, Nick Clegg, said that Meta’s Llama 3 model, with 8 billion parameters, delivers results comparable to those of the giant OpenAI GPT-4 model, which is estimated to have 1.76 trillion parameters.

Eric Boyd, Microsoft’s vice president for the Azure AI platform, said that obtaining this level of quality at a low cost will pave the way for a large number of applications that were previously out of reach because of the high cost to businesses of using giant models.

At the beginning of this year, Microsoft launched its Phi-3 model, the first in the category of small language models, which, with only 7 billion parameters, delivers performance that rivals and sometimes exceeds that of the giant GPT-3.5 model, with 20 billion parameters, according to Boyd.

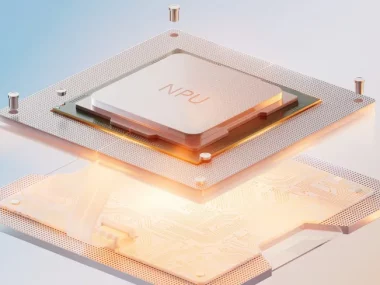

Higher privacy and fewer issues

In addition to profitability, small language models suit companies and clients who need a higher level of privacy. Their small size allows them to run locally in the memory of electronic devices without an Internet connection, so data processing takes place entirely on the device, without transferring the data to the servers of the companies that develop the models.

Last year, Google presented its small language model, Gemini Nano, which is designed mainly to run on portable smart devices such as phones. It offers a range of text and photography features and strong performance while preserving privacy, as all data processing is done locally on users’ phones.

Charlotte Marshall, managing partner of the law firm Addleshaw Goddard, said that many of her firm’s clients, especially banks and financial institutions, are seeking to expand their reliance on generative artificial intelligence, but fear of growing regulatory and legal requirements is hindering these efforts. The trend is therefore toward small models, which strike a balance between using modern technology and avoiding major legal problems.

Marcus wrote on the “X” platform, “The US government recently accused Microsoft of committing serious errors in the field of cybersecurity, which helped a group of Chinese hackers break into its servers to access the email addresses of a large number of officials.”

AFF